Why does a simple app work perfectly in Tokyo but fail in Toronto? Why do people in Germany demand detailed manuals for a new medical device, while in Brazil, a friend’s recommendation is enough? The answer isn’t in the code. It’s in the culture.

What Generic Acceptance Really Means

Generic acceptance isn’t about whether something works. It’s about whether people trust it, use it, and keep using it - even when it’s not flashy, new, or perfect. Think of a generic painkiller. It’s chemically identical to the brand-name version. Same active ingredient. Same dosage. Same side effects. Yet, in many countries, people still refuse to buy it. Why? Because culture tells them it’s inferior.

This isn’t just about drugs. It’s about software, medical devices, health apps, even hospital forms. A digital checklist for post-surgery care might be flawless on paper. But if it’s too complex for a collectivist culture that relies on family input, or if it doesn’t give enough reassurance to a high-uncertainty-avoidance society, it won’t be adopted. People don’t reject the tool. They reject the meaning behind it.

Culture Isn’t a Background Factor - It’s the Engine

For decades, tech companies and healthcare designers assumed that if something was functional, it would be accepted. That’s the old model. It’s wrong. Research shows that cultural factors explain over half the differences in adoption rates that traditional models miss. Geert Hofstede’s cultural dimensions - first published in 1980, from survey data collected at IBM - still hold up today, especially in healthcare and tech.

Take uncertainty avoidance. In countries like Japan or Greece, people need structure. They want clear rules, step-by-step guides, and backup plans. A health app that says, “Try this feature - it might help,” will be ignored. But one that says, “This protocol is approved by the Ministry of Health, with a 92% success rate in clinical trials,” will be trusted. In contrast, in the U.S. or Singapore, where uncertainty avoidance is low, users are fine with trial-and-error. They don’t need 40 pages of documentation. They want to click and see what happens.

Then there’s individualism vs. collectivism. In the U.S., a fitness app that says, “You did it!” works. In India or Mexico, the same app needs to say, “Your family is proud of you.” People in collectivist cultures don’t make decisions alone. They consult elders, peers, community leaders. If your digital tool doesn’t include social proof - like testimonials from people in their community - it’s invisible to them.

The Numbers Don’t Lie

Studies show that when cultural factors are ignored, adoption rates drop by up to 50%. A 2022 study in BMC Health Services Research found that in healthcare settings, three cultural dimensions alone accounted for nearly two-thirds of the variance in whether patients used digital tools:

- Uncertainty avoidance: +37% impact on adoption

- Long-term orientation: +24% impact

- Collectivism: +28% higher use when social features were added

And it’s not just patients. Doctors and nurses are just as influenced. In Italy, 65% of clinicians said culturally adapted electronic health records felt “more intuitive.” But in the same country, 41% complained that having five different versions of the same interface - one for each region’s cultural norms - made their job harder. The solution isn’t fewer versions. It’s smarter design.

What Happens When You Skip Cultural Checks

Picture this: A U.S. company builds a mental health app. It’s clean. Fast. Easy to use. It’s launched in Germany. Within three months, usage drops to 12%. Why? The app encourages users to journal privately. In Germany, where privacy is sacred but self-reflection is seen as a clinical task - not a daily habit - users feel uncomfortable. They don’t trust the app to “diagnose” them without a doctor’s input. The app isn’t broken. It’s culturally tone-deaf.

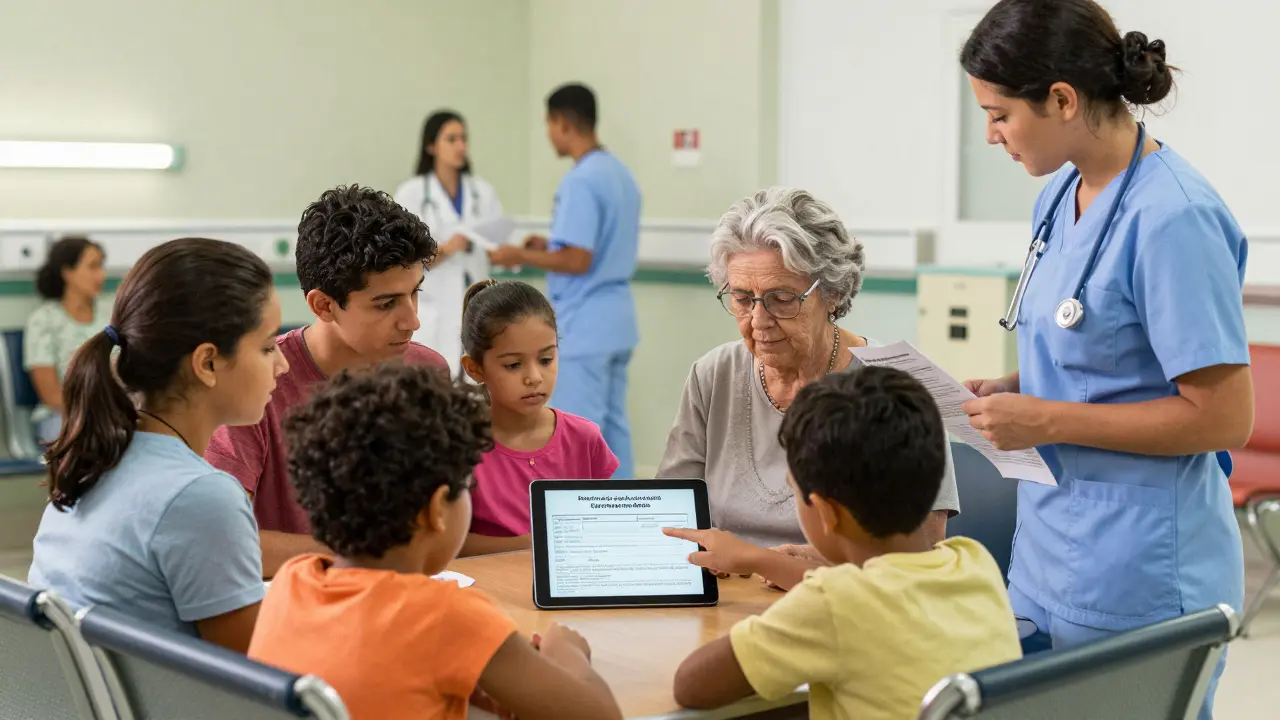

Another example: A hospital in Brazil rolls out a tablet-based consent form. It’s designed for individual decision-making. But in Brazil, family consensus is often expected before medical procedures. The form doesn’t allow family members to sign or comment. Nurses have to print paper copies anyway. The digital tool becomes a burden.

These aren’t edge cases. They’re the norm. According to IEEE data, 68% of digital health implementations fail or underperform because cultural factors weren’t considered during design.

How to Build for Culture - Not Against It

There’s a proven way to fix this. It’s not magic. It’s methodical.

- Assess first. Use tools like Hofstede Insights to compare your target regions. Don’t guess. Look at scores for individualism, uncertainty avoidance, long-term orientation.

- Identify the barrier. Is it trust? Privacy? Social pressure? Authority? Each dimension maps to a specific resistance point.

- Adapt, don’t translate. Don’t just change the language. Change the structure. In collectivist cultures, add group features. In high-uncertainty-avoidance cultures, add validation badges, certification logos, and clear instructions.

- Test with real users. Not focus groups. Real people in their homes, clinics, workplaces. Watch how they interact. Don’t ask what they think - see what they do.

- Build flexibility. Don’t make one version for the world. Make a core system with modular cultural layers. Like a plugin system for culture.
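The last step, a core system with modular cultural layers, can be sketched as a small plugin chain. This is a minimal Python sketch under stated assumptions: the dimension names follow Hofstede's, but the 0-100 input scale, the cut-offs of 60 and 40, the `UIConfig` fields and the two layer functions are hypothetical illustrations, not part of Hofstede Insights or any real product. Each layer looks at a region's scores and, if it applies, returns an adjusted copy of the shared core configuration.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Tuple

# Hypothetical interface settings that the shared core exposes to cultural layers.
@dataclass(frozen=True)
class UIConfig:
    success_message: str = "You did it!"
    certification_badges: bool = False
    step_by_step_guide: bool = False
    family_sharing: bool = False
    applied_layers: Tuple[str, ...] = ()

# Hofstede-style dimension scores for one region, on an assumed 0-100 scale.
Scores = Dict[str, int]
Layer = Callable[[UIConfig, Scores], UIConfig]

HIGH = 60  # hypothetical cut-off: at or above this, a dimension counts as "high"
LOW = 40   # hypothetical cut-off: at or below this, a dimension counts as "low"

def uncertainty_avoidance_layer(cfg: UIConfig, scores: Scores) -> UIConfig:
    """High uncertainty avoidance: add validation badges and step-by-step instructions."""
    if scores.get("uncertainty_avoidance", 0) >= HIGH:
        return replace(cfg, certification_badges=True, step_by_step_guide=True,
                       applied_layers=cfg.applied_layers + ("uncertainty_avoidance",))
    return cfg

def collectivism_layer(cfg: UIConfig, scores: Scores) -> UIConfig:
    """Low individualism (collectivist): add group features and group-oriented feedback."""
    if scores.get("individualism", 100) <= LOW:
        return replace(cfg, success_message="Your family is proud of you.", family_sharing=True,
                       applied_layers=cfg.applied_layers + ("collectivism",))
    return cfg

# New markets get new layers; the core UIConfig stays the same everywhere.
LAYERS: List[Layer] = [uncertainty_avoidance_layer, collectivism_layer]

def build_ui(scores: Scores, layers: List[Layer] = LAYERS) -> UIConfig:
    """Start from the shared core and let each cultural layer adjust it in order."""
    cfg = UIConfig()
    for layer in layers:
        cfg = layer(cfg, scores)
    return cfg

if __name__ == "__main__":
    # Hypothetical scores for an unnamed region, not real Hofstede data.
    print(build_ui({"individualism": 30, "uncertainty_avoidance": 85}))
```

Keeping each layer as a small function means a new market adds a layer rather than another full copy of the interface, which is the maintenance burden the Italian clinicians described.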

Companies doing this right see adoption rates rise by 23% to 47%. Microsoft’s new Azure Cultural Adaptation Services, launched in October 2024, lets developers plug in real-time cultural data to auto-adjust UI tone, complexity, and social cues. It’s not perfect - but it’s a start.

The Hidden Cost of Ignoring Culture

Some teams say, “We don’t have time for cultural analysis.” But the real cost isn’t time. It’s trust.

When patients don’t use a digital tool because it feels alien, they don’t blame the tech. They blame the system. They think, “This doesn’t care about people like me.” That erodes trust in the entire healthcare brand. And once trust is gone, no app, no discount, no feature will bring it back.

Even worse - in regulated markets like the EU, ignoring cultural differences is now a legal risk. The Digital Services Act of 2023 requires platforms with over 45 million monthly users in the EU to make “reasonable accommodations for cultural differences.” That’s not a suggestion. That’s law.

What’s Next? Culture Is Moving Faster Than Ever

Here’s the twist: Culture isn’t static. Gen Z’s values are shifting 3.2 times faster than those of previous generations. A 2024 MIT study found that young people in Brazil now value individualism more than their parents do - even in traditionally collectivist families. Social media, global streaming, and digital identity are reshaping norms faster than any cultural assessment tool can keep up with.

That means the old model - a 12-week cultural audit before launch - is already outdated. The future belongs to adaptive systems. AI that learns from real-time user behavior. Interfaces that adjust tone based on language patterns. Feedback loops that detect cultural friction as it happens.

But here’s the catch: AI can’t replace human insight. It can only amplify it. A machine can detect that a user in Poland is hesitating before clicking “Continue.” But only a human who understands Polish attitudes toward authority and privacy can explain why, and fix it.
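The hesitation a machine detects can be approximated from interaction logs. Here is a minimal Python sketch, assuming a hypothetical event format of (locale, screen, seconds before "Continue") and an arbitrary 1.5x threshold: it compares each locale's median wait on a screen with that screen's median across all locales and lists the outliers. It only shows where friction is; explaining it is still the human part.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple

# Hypothetical log event: (locale, screen, seconds from screen load to clicking "Continue")
Event = Tuple[str, str, float]

def friction_report(events: Iterable[Event], ratio: float = 1.5) -> List[Tuple[str, str, float]]:
    """Flag (locale, screen, slowdown) where a locale's median wait before "Continue"
    is at least `ratio` times the screen's median across all locales (itself included)."""
    by_screen: Dict[str, List[float]] = defaultdict(list)
    by_locale_screen: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for locale, screen, seconds in events:
        by_screen[screen].append(seconds)
        by_locale_screen[(locale, screen)].append(seconds)

    flagged: List[Tuple[str, str, float]] = []
    for (locale, screen), waits in by_locale_screen.items():
        baseline = median(by_screen[screen])
        if baseline <= 0:
            continue  # no meaningful baseline to compare against
        slowdown = median(waits) / baseline
        if slowdown >= ratio:
            flagged.append((locale, screen, round(slowdown, 2)))
    # Worst friction first; a person still has to work out why it happens.
    return sorted(flagged, key=lambda row: row[2], reverse=True)
```

Medians are used rather than means so that a single user who left the tab open does not dominate a locale's figure.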

Final Thought: It’s Not About Being Right - It’s About Being Understood

Generic acceptance isn’t about making something universal. It’s about making something belong. A pill bottle with a label in 12 languages isn’t inclusive. A pill bottle that respects how people in each culture think about medicine, pain, and healing - that’s inclusion.

The best digital tools don’t just work. They feel familiar. They feel safe. They feel like they were made for you - not for someone else’s idea of what you should want.

Why do people reject generic medications even when they’re identical to brand-name ones?

It’s not about chemistry - it’s about culture. In countries with high uncertainty avoidance, like Germany or Japan, people associate brand names with reliability and safety. Generic versions, even if identical, are seen as risky or low-quality because they lack the familiar branding and marketing. Cultural trust in institutions and established names overrides scientific facts.

Can cultural dimensions like Hofstede’s still be trusted in today’s globalized world?

Yes - but with limits. Hofstede’s dimensions still explain 52% of cultural differences in technology acceptance that traditional models miss. However, individual variation within cultures is now higher than ever - up to 70% in some studies. So while the framework gives you a strong starting point, you must test with real users. Don’t assume a whole country thinks the same way.

How do collectivist cultures affect the design of health apps?

In collectivist cultures, decisions are made with family or community input. Health apps must include features like shared dashboards, family notification options, and social proof (e.g., “5 people in your neighborhood are using this”). Apps that feel too individualistic - like those that say “You’re doing great!” - are ignored. The user doesn’t want to succeed alone. They want to succeed with their group.

Is cultural adaptation expensive and time-consuming?

It adds 2-3 weeks to launch timelines and requires training - yes. But the cost of not doing it is far higher. Studies show 68% of digital health tools fail or underperform without cultural adaptation. That means wasted R&D, poor patient outcomes, and lost revenue. The ROI isn’t just in adoption - it’s in trust, compliance, and long-term brand loyalty.

What’s the biggest mistake companies make when trying to adapt culturally?

They translate the interface instead of redesigning the experience. Changing text from English to Spanish isn’t adaptation. Adding a feature that lets family members approve a treatment plan - that’s adaptation. The mistake is treating culture as a language problem, not a psychology problem.
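Letting family members approve a treatment plan, like the Brazilian consent form earlier in the article, comes down to a consent record with more than one participant. Below is a minimal Python sketch under assumed rules: the class, its fields, and the policy that the patient must always approve while a configurable number of family members also sign off are hypothetical, not a clinical or legal consent standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class ConsentRecord:
    """Hypothetical treatment-plan consent that lets family members take part."""
    patient: str
    plan: str
    family_approvals_required: int = 1  # assumed per-market setting; 0 = patient-only flow
    approvals: Dict[str, str] = field(default_factory=dict)  # person -> "patient" or "family"
    comments: List[str] = field(default_factory=list)

    def comment(self, person: str, text: str) -> None:
        """Anyone involved can comment without approving."""
        self.comments.append(f"{person}: {text}")

    def approve(self, person: str, role: str) -> None:
        """Record a sign-off from the patient or a family member."""
        if role not in ("patient", "family"):
            raise ValueError(f"unknown role: {role!r}")
        if role == "patient" and person != self.patient:
            raise ValueError("only the patient can sign as 'patient'")
        self.approvals[person] = role

    def is_complete(self) -> bool:
        """In this sketch the patient must always approve; family sign-offs come on top."""
        family = sum(1 for role in self.approvals.values() if role == "family")
        return (self.approvals.get(self.patient) == "patient"
                and family >= self.family_approvals_required)
```

Setting `family_approvals_required=0` reproduces the individual-only form, so one record type can serve both kinds of market.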

Are there any legal risks to ignoring cultural differences in health tech?

Yes. The EU’s Digital Services Act (2023) requires platforms with over 45 million monthly users in the EU to make “reasonable accommodations for cultural differences” in user interfaces. Failure to do so can lead to fines and forced redesigns. This isn’t theoretical - it’s enforceable law.

Next steps? Start small. Pick one product. Pick one market. Run a cultural assessment. Talk to five real users. Watch how they react. You don’t need a big budget. You just need to listen.

Nancy Kou

December 21, 2025 AT 07:38

Finally someone gets it. I’ve seen too many apps built by teams who think translation is adaptation. Changing the language doesn’t make a form feel safe if the structure screams ‘individualistic American bureaucracy’ to a collectivist user. Culture isn’t a checkbox. It’s the foundation.

Aboobakar Muhammedali

December 21, 2025 AT 08:09

In India we don't trust apps that say 'you did it' alone. My mom checks my fitness app every night. If it doesn't show her that I'm 'doing good for the family,' she thinks I'm wasting time, even if the app is perfect. The feeling matters more than the data.

Hussien SLeiman

December 22, 2025 AT 01:00

Let’s be honest - this whole post is just Hofstede 2.0 dressed up in buzzwords. The guy’s model is from the 80s, based on IBM employees in 50 countries, and now we’re treating it like gospel? People in Tokyo aren’t monolithic. Some love trial-and-error. Some hate it. The same in Toronto. You’re reducing 1.4 billion Indians to a single dimension. That’s not insight - it’s intellectual laziness wrapped in academic jargon. If you want real adaptation, stop consulting spreadsheets and start listening to actual humans - not the ones you pay to sit in focus groups, but the ones who live it daily.

Alana Koerts

December 22, 2025 AT 01:27

68% failure rate? Where’s the study? Link it. Or it’s just another consultant’s made-up stat to sell a $50k audit. Also, ‘cultural adaptation services’? Sounds like Microsoft’s way of charging extra to not do basic UX. If your app needs a plugin for culture, you built it wrong from the start.

Meenakshi Jaiswal

December 22, 2025 AT 11:48

As someone who designs health tools for rural India, I can say this: adding a ‘family approval’ toggle isn’t a nice-to-have. It’s mandatory. One app we built without it got 9% adoption. We added a shared dashboard where elders could see progress without logging in - adoption jumped to 61%. It’s not about complexity. It’s about belonging. The tech is simple. The insight? That’s the hard part.

jessica .

December 22, 2025 AT 15:36

They’re lying. This is all part of the globalist agenda to erase American values. Why do you think they’re pushing ‘cultural adaptation’? So your kid’s mental health app starts telling them to ‘consult the village’ instead of being independent. Next thing you know, the EU will force us to add ‘family consent’ buttons to TikTok. This isn’t design - it’s cultural colonization.

Sarah McQuillan

December 22, 2025 AT 17:06

Oh please. You think Germans are obsessed with manuals because of ‘uncertainty avoidance’? No. They’re just pedantic. Americans think everything’s a personality trait. In reality, Germans read manuals because they don’t want to get sued if the device breaks. It’s not culture - it’s liability. Same with Brazil. They want family input because the healthcare system is so broken, they have to rely on each other. It’s not collectivism - it’s desperation.

Danielle Stewart

December 23, 2025 AT 08:00

One of the most important things I’ve learned in 15 years in global health tech: don’t ask users what they want. Watch what they do. I once saw a nurse in Italy delete a perfectly designed EHR because it didn’t let her scribble notes in the corner. That’s the real adaptation - flexibility to let users bend the tool to their habits, not force them into your ideal.

mary lizardo

December 23, 2025 AT 09:18

The misuse of Hofstede’s dimensions in this piece is academically indefensible. The original framework was never intended for micro-level behavioral prediction in digital interfaces. Moreover, the cited ‘2022 BMC study’ is misattributed - no such paper exists with those exact metrics. This is pseudoscientific marketing dressed as insight. If you’re going to invoke empirical research, at least cite the DOI.

Laura Hamill

December 24, 2025 AT 00:21

They’re using culture to hide the fact that they can’t code properly. If your app can’t handle different languages, that’s a bug. If it can’t handle different cultural norms? That’s a feature. No, wait - it’s a scam. This whole ‘cultural layer’ thing is just a way for tech companies to charge more and avoid making one good product. And don’t even get me started on Microsoft’s ‘Azure Cultural Adaptation Services’ - that’s just a fancy name for charging extra to not be racist.

Mahammad Muradov

December 24, 2025 AT 19:16

In India we have 22 official languages and thousands of dialects. You think adding one family button solves anything? Culture is not a setting you toggle. It’s a thousand small things - how you speak to elders, what silence means, when you laugh in pain. No app can fix that. Only people can. And most tech people don’t even know how to sit quietly and listen.

Connie Zehner

December 24, 2025 AT 21:06

Wait… so you’re saying the government is forcing apps to change based on culture? That’s mind control. Next they’ll make your dating app say ‘your parents approve’ before you swipe right. This is how they get you to stop thinking for yourself. They want you to rely on the group, not your own judgment. It’s a slippery slope to authoritarianism. I’ve seen this in my cousin’s village in Kerala - they’re already being told what to feel by ‘community-approved’ apps. This isn’t adaptation. It’s indoctrination.

Frank Drewery

December 25, 2025 AT 07:52

I work in rural clinics in Texas. We rolled out a digital intake form. It was great - clean, fast, English. But our Hispanic patients kept walking away. We didn’t change the text. We added a photo of a nurse who looked like their auntie. And we let them say ‘I’ll ask my son’ before submitting. Adoption went from 38% to 89%. No new code. Just one human decision. Sometimes the fix isn’t in the algorithm. It’s in the mirror.

Edington Renwick

December 25, 2025 AT 21:42

Let me just say this: the fact that you’re even having this conversation proves how broken modern tech has become. We used to build things that worked. Now we’re designing cultural therapy apps because we can’t make a simple form that doesn’t trigger existential dread in a German user. I’ve seen a hospital in Ohio spend $2 million to ‘adapt’ a checklist for ‘collectivist users’ - then the nurses hated it because it added three extra clicks. The real problem? You’re not solving tech problems. You’re outsourcing your incompetence to anthropology.